# When Your CRM Reports Look Perfect But Your Decisions Keep Failing

Organizations evaluate CRM reporting through visual design and feature breadth, but miss the critical metric: report confidence decay. A report with 90% accuracy at go-live can degrade to 45% confidence in 18 months as business rules drift from report logic—yet the dashboard still renders perfectly, creating false certainty that drives million-dollar decisions based on fiction.

The quarterly business review starts at 9 AM. Your CFO opens the CRM dashboard—clean charts, trending lines, conversion funnels color-coded by performance tier. Product A shows 34% quarter-over-quarter growth. Product B sits at 12%. The decision writes itself: double down on A, reallocate budget from B.

Six months later, the finance team runs an audit. Product A's actual revenue? Down 8%. Product B? Up 41%. The CRM dashboard still shows the same confident numbers. No error messages. No warnings. Just beautifully rendered graphs pointing in the wrong direction.

This isn't a story about bad data. It's a story about invisible decay.

## The Confidence You Didn't Earn

When your CRM vendor demos their reporting module, they show you dashboards built on pristine sample data. Every field populated. Every relationship mapped. Every calculation verified. You see a system that transforms raw customer interactions into strategic clarity.

What they don't show you is the half-life.

Reports don't break loudly. They degrade quietly. A sales process changes—someone adds a new pipeline stage without updating the conversion logic. A product line launches—the revenue attribution model doesn't account for bundled deals. An integration gets patched—the API field mapping shifts by one column. Each change shaves a few percentage points off your report's accuracy. But the dashboard still renders. The charts still update. The executive summary still populates on schedule.

You're flying blind with an altimeter that looks like it's working.

## Why "Looking Professional" Became Your Validation Standard

Most organizations evaluate CRM reporting through a selection committee. Sales wants pipeline visibility. Marketing wants attribution tracking. Finance wants revenue forecasting. IT wants integration compatibility. Everyone agrees the dashboards need to "look good."

So you assess:

- **Visual design**: Can we customize colors and layouts?
- **Feature breadth**: How many chart types does it support?
- **Drill-down capability**: Can executives click through to details?
- **Export options**: Does it output to PDF and Excel?

What you don't assess:

- **Confidence interval**: What's the margin of error on this forecast?
- **Data lineage**: Which source systems feed this metric?
- **Staleness indicator**: When was this calculation last validated?
- **Decay rate**: How fast does accuracy degrade after a schema change?

The vendor's demo data has 100% accuracy because it was hand-crafted for the demo. Your production data—shaped by six years of process evolution, three CRM migrations, and forty-two "quick fixes"—has accuracy that compounds downward with every aggregation layer.

Consider a report that pulls from three data sources:

- **Customer records**: 85% accurate (duplicate entries, outdated addresses)
- **Transaction history**: 78% accurate (manual entry errors, integration gaps)
- **Product catalog**: 92% accurate (discontinued items still flagged as active)

When you aggregate these into a "Customer Lifetime Value by Product Category" dashboard, you're not averaging the accuracy. Assuming the errors in each source are independent, you're multiplying it:

0.85 × 0.78 × 0.92 = 61% confidence

Add a six-month time lag (business rules changed, but the report logic didn't), and you're making strategic decisions on data that's closer to a coin flip than a compass.

But the dashboard doesn't tell you that. It shows you a bar chart with two decimal places of precision.
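The multiplication above can be sketched in a few lines of Python. This is a rough illustration, not a rigorous statistical model: it assumes the errors in each source are independent, and the dictionary keys are hypothetical labels for the three sources named above.

```python
from math import prod

# Per-source accuracy estimates from the example above.
# Keys are illustrative labels, not real table names.
sources = {
    "customer_records": 0.85,
    "transaction_history": 0.78,
    "product_catalog": 0.92,
}

# What people tend to assume: the accuracies average out.
average = sum(sources.values()) / len(sources)

# What happens if errors are independent: the accuracies multiply.
compounded = prod(sources.values())

print(f"Average accuracy:      {average:.0%}")     # 85%
print(f"Compounded confidence: {compounded:.0%}")  # 61%
```

The 24-point gap between the two figures is the part the dashboard never shows.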

## The Three Stages of Report Decay (And Why You Only See One)

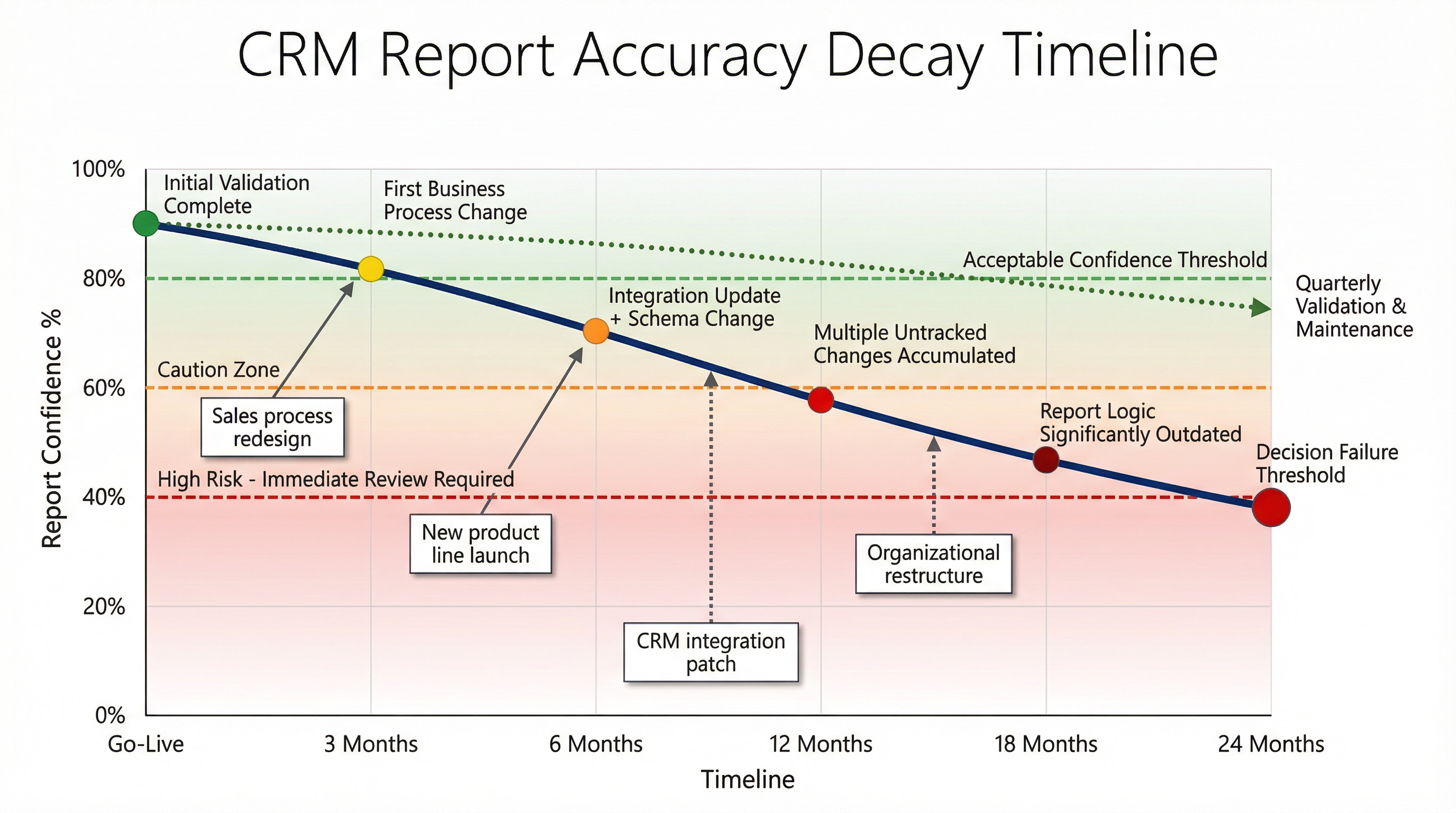

### Stage 1: Creation Confidence

Your CRM implementation partner builds the reports during go-live. They map every field, test every calculation, and validate outputs against your legacy system. The reports match. You sign off. Everyone celebrates.

At this moment, your reports might actually be 90% accurate. You've earned that confidence through rigorous validation.

But you've also started the clock.

### Stage 2: Silent Degradation

Three months post-launch, your sales team requests a new deal stage: "Verbal Commitment." It sits between "Proposal Sent" and "Contract Signed." The admin adds it to the pipeline. The reports keep running.

Except now your "Deals in Negotiation" metric is undercounting—because the calculation still uses the old stage list. Your forecast model assumes deals move from Proposal to Contract in 14 days. With the new stage, the real cycle is 22 days. Your pipeline coverage report—built on that 14-day assumption—is now telling you that you're 30% ahead of target when you're actually 15% behind.

No error message. No warning. Just a slow drift from "mostly right" to "dangerously wrong."

Six months in, your marketing team launches a new lead source: "Partner Referral." They add it to the dropdown. The attribution report keeps running—but it's still allocating 100% of revenue across the old five sources. Partner Referrals get bucketed into "Other," which your exec dashboard filters out as noise.

You're now making budget decisions based on a report that's missing 18% of your actual lead volume.

Twelve months in, your finance team reclassifies three product SKUs from "Hardware" to "Subscription" for revenue recognition purposes. The product dimension table updates. The sales reports don't. Your "Hardware Revenue Trend" chart shows a 40% decline. Your CEO asks why the hardware business is collapsing. It's not—you're just measuring a category that no longer exists.

At no point does the CRM send you an alert that says, "Warning: This report's assumptions are now 18 months out of date."

### Stage 3: Decision Failure

The decay becomes visible only when the decision fails. You reallocate headcount based on a territory performance report—and discover six months later that the top territory was actually underperforming, but a data mapping error was crediting it with deals from two other regions. You cut a product line based on a profitability analysis—and learn in the post-mortem that the cost allocation model was still using pre-merger overhead rates.

By the time you see the problem, you've already paid for it.

## The Compounding Cost of Aggregate Confidence

Here's the mechanism most procurement teams miss: **report confidence doesn't degrade linearly—it compounds downward with every layer of aggregation.**

Imagine you're building a "Win Rate by Industry" executive dashboard. The data flow looks like this:

1. **Source: CRM opportunity records** (82% accurate: some deals logged in the wrong industry)
2. **Transformation: Industry grouping logic** (88% accurate: some industries mapped to legacy categories)
3. **Calculation: Win rate formula** (95% accurate: some closed-lost deals missing close dates)
4. **Presentation: Dashboard aggregation** (90% accurate: some filters exclude edge cases)

Your final confidence isn't 82%. It's 0.82 × 0.88 × 0.95 × 0.90 = **61.7%**.

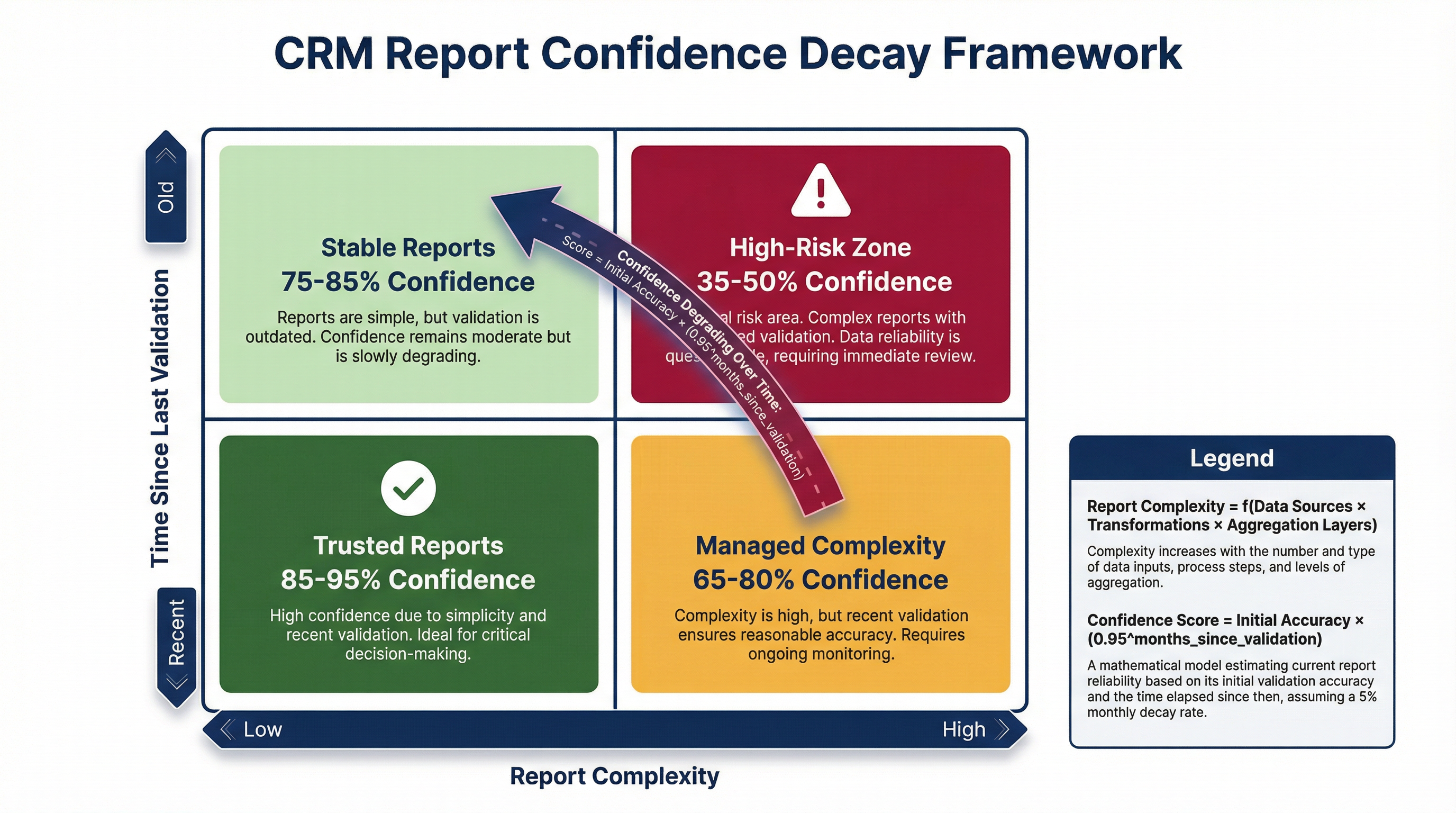

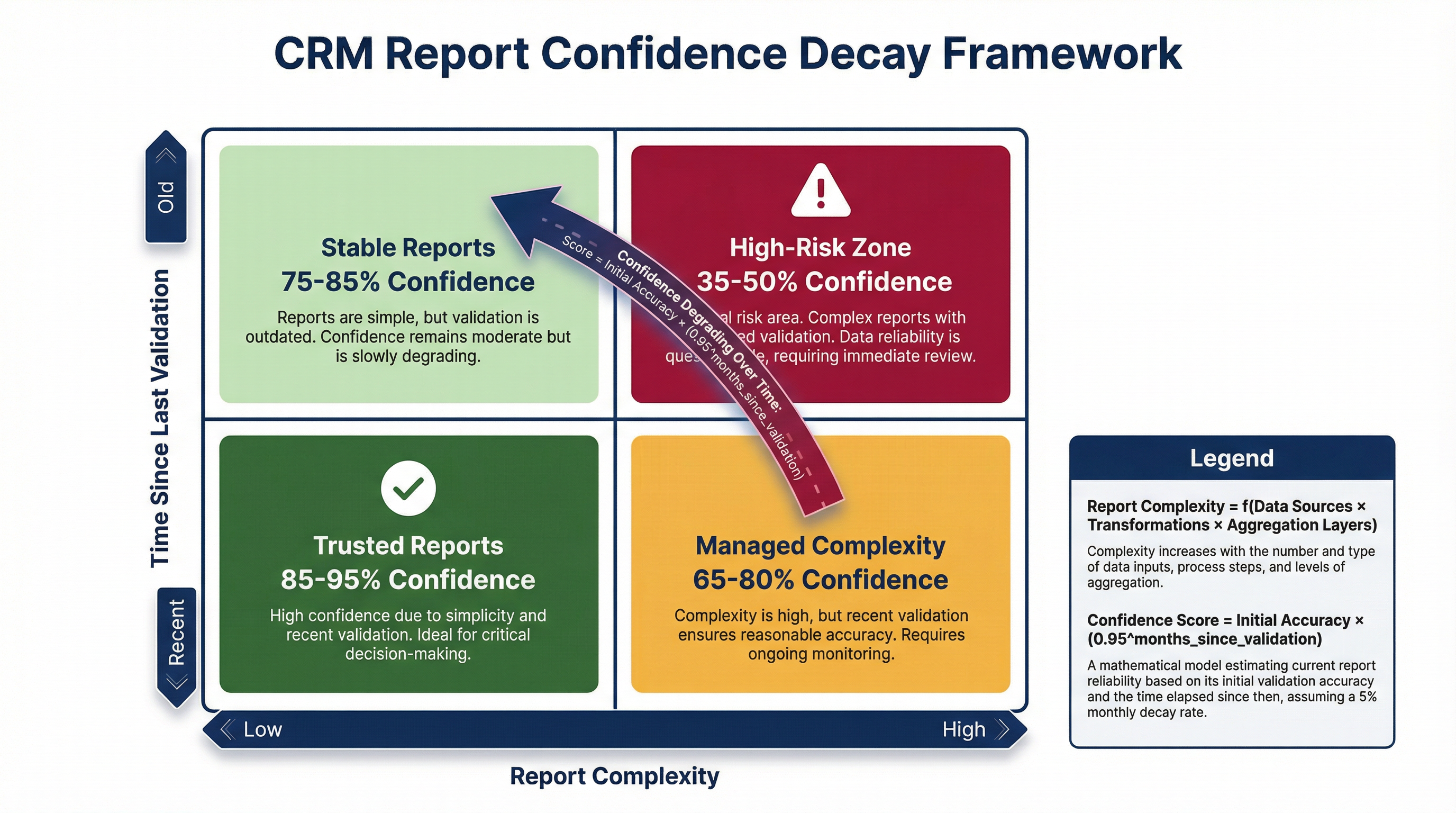

Now add time decay. For every month that passes without re-validating the report, accuracy drops by roughly 3-5% as business rules drift from report logic. After six months at the 5% end of that range, your roughly 61% confidence is now about 0.61 × 0.95⁶ ≈ **45%**.

You're making million-dollar decisions on a report that's barely better than a guess.

But when you open the dashboard, you see:

- **Healthcare: 34% win rate** (displayed with a green up-arrow)
- **Manufacturing: 28% win rate** (displayed with a red down-arrow)
- **Financial Services: 41% win rate** (displayed with a gold star)

No confidence intervals. No data quality scores. No "last validated" timestamp. Just numbers that look authoritative because they're rendered in a professional font.
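The compounding and time-decay arithmetic from this section can be reproduced with a short Python sketch. It assumes independent errors across layers and a constant monthly loss, both simplifications, and the function name `decayed_confidence` is made up for illustration.

```python
from math import prod

def decayed_confidence(layer_accuracies, monthly_loss, months):
    """Compound per-layer accuracies, then apply a constant monthly loss."""
    return prod(layer_accuracies) * (1 - monthly_loss) ** months

# Source, transformation, calculation, presentation (from the example above).
layers = [0.82, 0.88, 0.95, 0.90]

at_validation = decayed_confidence(layers, 0.0, 0)
six_months_slow = decayed_confidence(layers, 0.03, 6)  # 3% monthly loss
six_months_fast = decayed_confidence(layers, 0.05, 6)  # 5% monthly loss

print(f"At validation:        {at_validation:.1%}")    # 61.7%
print(f"After 6 months at 3%: {six_months_slow:.1%}")  # 51.4%
print(f"After 6 months at 5%: {six_months_fast:.1%}")  # 45.4%
```

Even at the slower end of the range, six months without re-validation costs about ten points of confidence.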

## What "Report Confidence Monitoring" Actually Requires

Most organizations treat report validation as a one-time go-live activity. You test the reports during implementation, sign off, and move on. The assumption is that if the report worked correctly on Day 1, it will keep working correctly on Day 500.

That assumption is wrong.

Maintaining report confidence requires treating reports like code in production—because that's what they are. Every report is a set of assumptions encoded in SQL, formulas, and filter logic. When the underlying data changes, those assumptions break. But unlike application code, reports don't throw errors when they break—they just return wrong answers.

A sustainable report confidence program includes:

### 1. Data Lineage Documentation

For every metric on every dashboard, you need to be able to answer:

- Which source tables feed this calculation?
- What transformations are applied?
- What business rules are embedded in the logic?
- When was this logic last reviewed?

If your team can't produce this documentation in under five minutes, your reports are already decaying.
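One lightweight way to keep those four answers on hand is a structured record per metric. The sketch below is a hypothetical shape, not a feature of any particular CRM: the class name, field names, table names, and dates are all assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import date

@dataclass
class MetricLineage:
    """One lineage record per dashboard metric (hypothetical structure)."""
    metric: str
    source_tables: list[str]
    transformations: list[str]
    business_rules: list[str]
    last_reviewed: date

    def days_since_review(self, today: date) -> int:
        return (today - self.last_reviewed).days

# Example record; table names and dates are invented for illustration.
win_rate_lineage = MetricLineage(
    metric="Win Rate by Industry",
    source_tables=["opportunities", "accounts"],
    transformations=["map account industry to reporting category"],
    business_rules=["count only deals with a close date"],
    last_reviewed=date(2023, 1, 15),
)

print(win_rate_lineage.days_since_review(today=date(2024, 7, 15)))  # 547
```

Stored alongside the report definition, a record like this turns the five-minute test into a lookup.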

### 2. Staleness Indicators

Every report should display:

- **Last validation date**: When was this report's logic last checked against current business rules?
- **Data freshness**: When was the underlying data last updated?
- **Confidence score**: What's the estimated accuracy based on data quality metrics?

If your executives are making decisions based on a report that was last validated 18 months ago, they should see that timestamp before they see the chart.
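As a rough sketch of what such an indicator could look like, the function below builds a one-line banner to show above a chart. The function name, the 90-day threshold, and the example dates are assumptions for illustration; the confidence value is simply passed in rather than computed.

```python
from datetime import date

def staleness_banner(last_validated, data_refreshed, confidence, today,
                     max_age_days=90):
    """Build a banner line; flag the report if its logic review is overdue."""
    age_days = (today - last_validated).days
    status = "NEEDS REVIEW" if age_days > max_age_days else "OK"
    return (
        f"[{status}] logic validated {age_days} days ago | "
        f"data refreshed {data_refreshed.isoformat()} | "
        f"est. confidence {confidence:.0%}"
    )

banner = staleness_banner(
    last_validated=date(2023, 1, 15),   # roughly 18 months before "today"
    data_refreshed=date(2024, 7, 14),
    confidence=0.65,
    today=date(2024, 7, 15),
)
print(banner)
# [NEEDS REVIEW] logic validated 547 days ago | data refreshed 2024-07-14 | est. confidence 65%
```

Fresh data and stale logic appear side by side here, which is the combination a clean-looking dashboard hides.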

### 3. Automated Drift Detection

When a field gets added to your CRM, when a picklist value changes, when a workflow rule updates—your reporting system should flag every report that might be affected. Not as an error, but as a "needs review" alert.

Most CRMs don't do this. They assume that if the query runs without throwing an error, the output is correct. That's like assuming your car is safe to drive because the engine starts.
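A minimal version of drift detection can be sketched as a set comparison: record which picklist values each report's logic handles, then compare that against the values currently live in the CRM. Everything below is hypothetical, including the function name, the field names, and the lead-source values other than "Partner Referral". A real implementation would read both sides from the CRM's metadata rather than hard-coding them.

```python
def find_drifted_reports(report_dependencies, current_picklists):
    """Return {report: {field: [values the report logic does not handle]}}."""
    flagged = {}
    for report, handled_by_field in report_dependencies.items():
        for field_name, handled_values in handled_by_field.items():
            live_values = current_picklists.get(field_name, set())
            unhandled = live_values - handled_values
            if unhandled:
                flagged.setdefault(report, {})[field_name] = sorted(unhandled)
    return flagged

# Values each report's logic was built to handle (captured at validation time).
report_dependencies = {
    "Deals in Negotiation": {"stage": {"Proposal Sent", "Contract Signed"}},
    "Lead Source Attribution": {
        "lead_source": {"Web", "Email", "Event", "Outbound", "Other"},
    },
}

# Values that exist in the CRM today.
current_picklists = {
    "stage": {"Proposal Sent", "Verbal Commitment", "Contract Signed"},
    "lead_source": {"Web", "Email", "Event", "Outbound", "Other",
                    "Partner Referral"},
}

needs_review = find_drifted_reports(report_dependencies, current_picklists)
for report, fields in needs_review.items():
    print(f"Needs review: {report} -> {fields}")
```

This only catches added values. Renamed or removed values, and changes to workflow rules, would need their own checks.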

### 4. Periodic Re-Validation Cycles

Every quarter, a cross-functional team (sales ops, finance, IT) should review your top 20 reports and ask:

- Do the business rules embedded in this report still match how we actually operate?
- Have any data sources changed in ways that would affect this calculation?
- If we ran this report's logic against a known test case, would it return the expected result?

This isn't a "nice to have" governance activity. It's the only way to prevent your reports from drifting into fiction.
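The third question, running report logic against a known test case, works much like a unit test. The sketch below assumes the report's calculation can be expressed as a function. The `win_rate` logic, the sample deals, and the hand-calculated expected value are all invented for illustration.

```python
def win_rate(deals):
    """Report logic under test: ignores deals that have no close date."""
    closed = [
        d for d in deals
        if d["close_date"] is not None
        and d["stage"] in ("Closed Won", "Closed Lost")
    ]
    won = sum(1 for d in closed if d["stage"] == "Closed Won")
    return won / len(closed) if closed else 0.0

def revalidate(report_fn, records, expected, tolerance=0.01):
    """Run report logic on a known case and compare with a manual result."""
    actual = report_fn(records)
    return actual, abs(actual - expected) <= tolerance

# Known case: 2 won, 2 lost. One lost deal was never given a close date.
known_case = [
    {"stage": "Closed Won", "close_date": "2024-03-01"},
    {"stage": "Closed Won", "close_date": "2024-03-09"},
    {"stage": "Closed Lost", "close_date": "2024-03-12"},
    {"stage": "Closed Lost", "close_date": None},
]

# Manual calculation by the review team: 2 wins out of 4 closed deals.
actual, passed = revalidate(win_rate, known_case, expected=0.5)
print(f"report says {actual:.0%}, expected 50%, passed={passed}")
```

Here the check fails because the report silently drops the lost deal with the missing close date and inflates the win rate. The query itself would never raise that as an error.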

## Why This Matters More Than Your CRM's Feature Count

When you're evaluating CRM platforms, the vendor will show you a feature matrix. System A has 47 pre-built reports. System B has 89. System C has "unlimited custom dashboards."

None of that matters if you can't trust the numbers.

A CRM with 10 reports that maintain 85% confidence over two years is infinitely more valuable than a CRM with 100 reports that decay to 50% confidence in six months. But procurement teams don't ask about confidence decay rates. They ask about chart types and export formats.

The result: organizations spend six figures on reporting tools, then make decisions based on spreadsheets exported from the CRM and manually corrected by analysts who "know the data is wrong but can't explain why."

If your team is doing that, your reporting system isn't a decision support tool—it's a liability with a dashboard.

## What to Do If Your Reports Are Already Decaying

If you're reading this and thinking, "We definitely have this problem," here's the triage sequence:

### Immediate (This Week)

Identify your top 5 decision-critical reports—the ones that drive budget allocation, headcount planning, or product strategy. For each one, answer:

- When was this report's logic last validated against current business rules?
- What data sources feed it, and have any of those sources changed in the past 6 months?
- If we spot-checked 10 records, would the report's calculation match a manual calculation?

If you can't answer those questions, stop using the report until you can.

### Short-Term (This Month)

Build a "report confidence scorecard" for your top 20 reports. For each report, assign:

- **Data quality score** (0-100): Based on completeness, accuracy, and freshness of source data
- **Logic staleness score** (0-100): Based on how long it's been since the calculation logic was reviewed
- **Usage risk score** (Low/Medium/High): Based on the business impact of decisions made using this report

Any report that scores below 70 on either numeric dimension (treating higher as better on both) or carries a High usage risk should trigger an immediate review.
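A scorecard like this fits in a spreadsheet, but the review rule is easy to sketch in Python as well. The version below makes two assumptions the text leaves open: both numeric scores are scaled so that higher is better, and a report's overall confidence is the weaker of the two. The class name and sample reports are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class ReportScore:
    name: str
    data_quality: int     # 0-100, higher is better
    logic_staleness: int  # 0-100, assumed here: higher = reviewed more recently
    usage_risk: str       # "Low", "Medium", or "High"

    @property
    def confidence(self) -> int:
        # Assumption: a report is only as trustworthy as its weakest score.
        return min(self.data_quality, self.logic_staleness)

    def needs_review(self, threshold: int = 70) -> bool:
        return self.confidence < threshold or self.usage_risk == "High"

# Sample scores, invented for illustration.
scorecard = [
    ReportScore("Pipeline Coverage", 88, 55, "Medium"),
    ReportScore("Territory Performance", 91, 85, "High"),
    ReportScore("Weekly Activity Summary", 80, 90, "Low"),
]

review_queue = [r.name for r in scorecard if r.needs_review()]
print(review_queue)  # ['Pipeline Coverage', 'Territory Performance']
```

Taking the minimum is a deliberately conservative choice. A weighted average would also work, but it lets a strong data quality score mask stale logic.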

### Long-Term (This Quarter)

Implement a report governance process:

- **Ownership**: Every report has a named owner responsible for accuracy
- **Review cadence**: Critical reports reviewed quarterly, standard reports reviewed annually
- **Change triggers**: Any CRM schema change, process change, or integration update triggers a review of affected reports
- **Confidence indicators**: All reports display last-validation date and estimated confidence score

This isn't about perfection. It's about visibility. If your executives know they're making a decision based on a report with 65% confidence, they'll seek additional validation. If they think they're working with 95% confidence when it's actually 65%, they'll make bad decisions with false certainty.

## The Real Cost of Invisible Decay

The direct cost of report decay is measurable: wrong decisions, missed opportunities, wasted budget. But the indirect cost is worse.

When your team stops trusting the CRM, they build shadow systems. Spreadsheets. Access databases. Slack channels where people share "the real numbers." You end up with multiple versions of the truth, none of them authoritative, all of them maintained manually.

Your CRM becomes a data graveyard—technically functional, but operationally useless. You're paying for a system that nobody trusts, while your team wastes hours every week reconciling conflicting reports.

And the worst part? The CRM vendor's support team can't help you. Because from their perspective, the system is working perfectly. The queries run. The dashboards load. The exports generate. The fact that the numbers are wrong isn't a bug—it's a configuration issue. And configuration is your responsibility.

## The Question You Should Have Asked During Selection

When you were evaluating CRM platforms, the vendor demo probably included a slide about "robust reporting and analytics." They showed you colorful dashboards. They talked about "real-time insights" and "data-driven decision making."

Here's the question you should have asked:

"How does your system help me maintain report confidence over time as my business rules change?"

If the answer was anything other than "Here's our data lineage tracking, here's our automated drift detection, here's our confidence scoring framework," you bought a reporting tool that will decay into unreliability—and you won't know it until your decisions start failing.

The good news: it's not too late to fix it. But it requires treating report confidence as a first-class operational metric, not an afterthought.

Because a beautiful dashboard that's wrong is worse than no dashboard at all. At least when you don't have data, you know you're making decisions based on judgment. When you have a dashboard that looks authoritative but is quietly decaying, you're making decisions based on fiction—and calling it strategy.