# Why Your CRM Trial Failed Before It Even Started

Most CRM trials fail not because of the software, but because organizations treat the 14-day window as "free" when it's actually a $2,500-$5,000 bandwidth investment requiring 50-100 team hours. Learn why proper trial preparation determines CRM selection success.

Most organizations discover their CRM choice was wrong six months after purchase, not during the trial period. The evaluation felt thorough at the time—features were tested, stakeholders gave feedback, pricing seemed reasonable. Yet within half a year, adoption stalls, workarounds proliferate, and the search begins again.

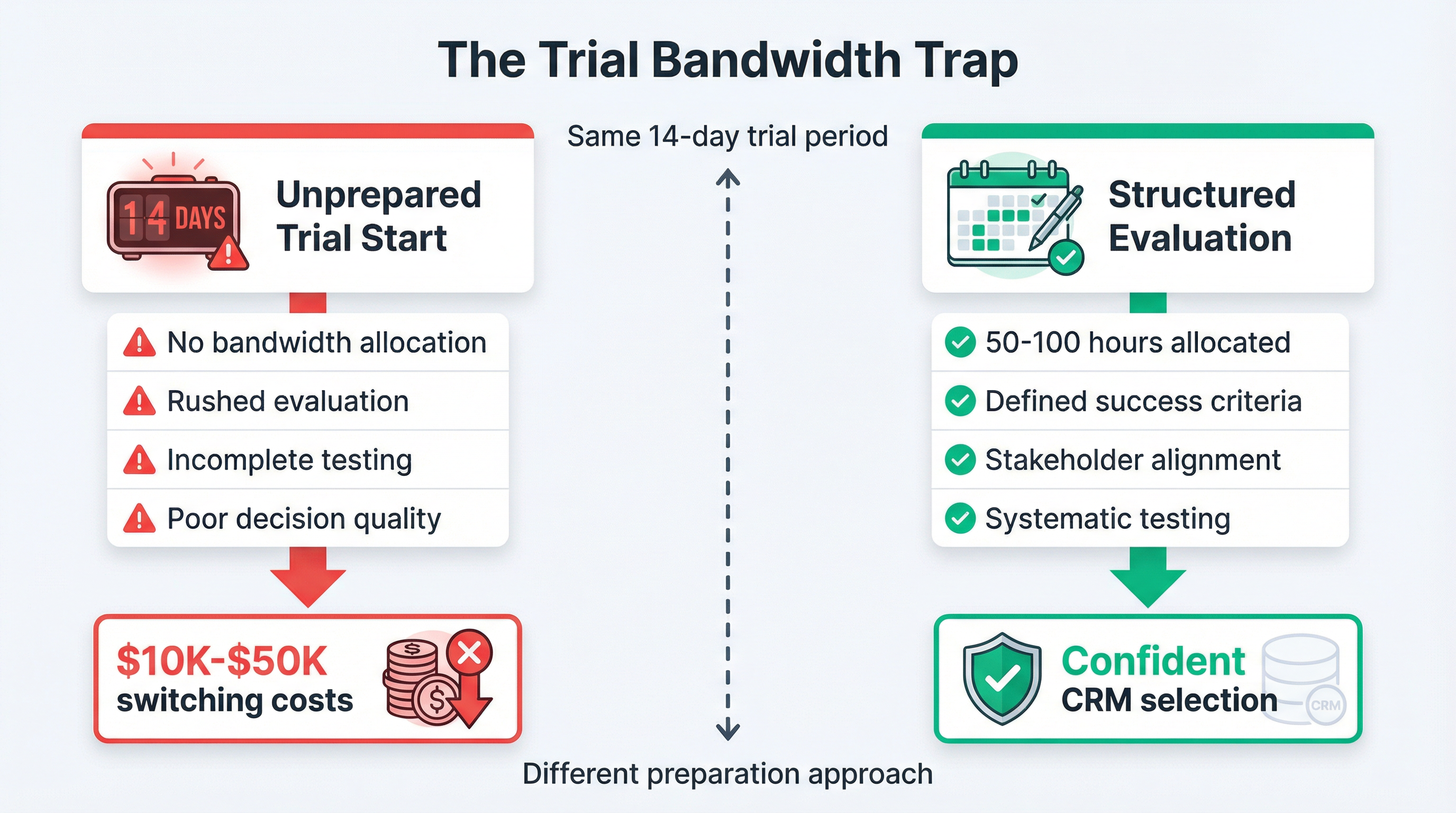

The failure point isn't the CRM itself. It's the trial period structure that creates an illusion of evaluation while systematically preventing proper assessment. Companies treat the 14-day window as a "try before you buy" opportunity when it actually functions as a bandwidth-bounded proof-of-concept that most organizations are structurally unprepared to execute.

## The Free Trial That Costs $5,000

When a CRM vendor offers a "free 14-day trial," the software license fee is waived. But the trial isn't free—it's a time-bounded organizational commitment that consumes resources most companies never budget for.

A proper CRM evaluation for a five-person sales team requires approximately 50 to 100 hours of collective effort. The IT manager spends 10 to 20 hours planning the evaluation framework, defining success criteria, coordinating stakeholders, and synthesizing feedback. Each team member invests 5 to 10 hours learning the interface, testing workflows, and documenting friction points, which comes to 25 to 50 hours across the team. Coordination overhead (scheduling alignment meetings, consolidating input, presenting findings) adds another 3 to 5 hours. Together these itemized tasks come to 38 to 75 hours, so the 50 to 100 hour figure should be read as a rounded estimate that leaves headroom for untracked effort.

At a conservative $50 per hour average labor cost, this "free" trial actually costs $2,500 to $5,000 in organizational bandwidth. If the rushed evaluation leads to a poor CRM selection, the subsequent switching costs—data migration, retraining, productivity loss, vendor negotiation—compound to $10,000 to $50,000 or more.
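The arithmetic behind that estimate can be sketched in a few lines of Python. The function name `trial_bandwidth_cost` is illustrative, and the inputs are the article's own figures (50 to 100 hours at $50 per hour); substitute your team's numbers.

```python
def trial_bandwidth_cost(hours_low, hours_high, hourly_rate):
    """Return the (low, high) labor cost of a trial, in dollars."""
    return hours_low * hourly_rate, hours_high * hourly_rate


# Figures from the text: 50 to 100 team hours at $50 per hour.
low, high = trial_bandwidth_cost(50, 100, 50)
print(f"Trial bandwidth cost: ${low:,.0f} to ${high:,.0f}")
# prints "Trial bandwidth cost: $2,500 to $5,000"
```

Running the same function with a higher blended rate or a larger team shows how quickly the "free" trial becomes a meaningful budget line.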

Organizations that don't recognize this bandwidth requirement upfront enter trials unprepared. The 14-day countdown begins, but no one has cleared their calendar. Testing happens in fragmented 20-minute windows between meetings. Stakeholder feedback arrives piecemeal. The decision gets made based on whoever had time to click around, not on systematic evaluation of business-critical workflows.

## When Evaluation Bandwidth Becomes the Bottleneck

The trial period doesn't measure CRM capability—it measures organizational evaluation capacity. A CRM with 200 features can't be properly assessed if the team only has bandwidth to test 20. A pricing model with tier-based limits can't be validated if no one has time to project future growth scenarios. Integration requirements can't be verified if IT is too busy firefighting production issues to review API documentation.

This bandwidth constraint creates a predictable failure pattern. Organizations start trials when someone finally gets approval to "look at CRM options," not when the team actually has capacity to evaluate properly. The trial clock starts ticking immediately, regardless of whether the organization is ready. Evaluation happens in whatever time remains after existing commitments, which is rarely sufficient for thorough assessment.

The result is evaluation theater—going through the motions of testing without the depth required for confident decision-making. Features get checked off a list without validating whether they solve actual workflow problems. Stakeholders provide feedback based on 30-minute demos rather than week-long usage patterns. Pricing gets compared on a spreadsheet without modeling how costs scale as the team grows.

When the trial expires, the decision isn't "Is this the right CRM?" but rather "Do we have enough information to avoid looking incompetent?" The answer is usually no, but the organizational pressure to make progress forces a choice anyway. The CRM gets purchased, adoption struggles, and six months later everyone wonders why the evaluation didn't catch these obvious problems.

## The Structural Mismatch Between Trial Design and Evaluation Reality

CRM vendors design trial periods to optimize conversion rates, not evaluation quality. A 14-day window creates urgency: long enough to explore key features, short enough to prevent overthinking. The trial-to-paid conversion rate for most SaaS products ranges from 1% to 10%, meaning 90% to 99% of trials fail to convert. Vendors accept this ratio because the marginal cost of providing trial access is near zero, making it profitable even with high failure rates.

But for the evaluating organization, the cost structure is inverted. The bandwidth investment is front-loaded and fixed—those 50 to 100 evaluation hours must be spent regardless of whether the CRM ultimately gets purchased. A failed trial doesn't return that time. It's a sunk cost that now must be repeated with the next vendor, compounding the total evaluation expense.

This creates a perverse incentive structure. Vendors benefit from frictionless trial starts because volume compensates for low conversion. Organizations need high-friction trial starts—formal readiness assessments, bandwidth allocation planning, success criteria definition—to ensure evaluation quality. The market has optimized for the former while systematically ignoring the latter.

The 14-day countdown timer is particularly problematic. It creates artificial time pressure that forces decisions before proper evaluation completes. Organizations that request trial extensions often get them, but few ask because it feels like admitting failure. The trial period becomes a test of organizational speed rather than CRM suitability, rewarding companies that can mobilize quickly over those that evaluate thoroughly.

## What Proper Trial Preparation Actually Requires

Organizations that successfully evaluate CRM software don't start trials when they get vendor access—they start weeks earlier with internal preparation. The trial period is the execution phase of an evaluation plan that was designed before any software was touched.

Proper preparation begins with defining evaluation success criteria. What specific workflows must this CRM support? What adoption rate would indicate the interface is intuitive enough? What integration points are non-negotiable versus nice-to-have? These questions get answered before the trial starts, creating a clear framework for what to test and how to measure results.

Bandwidth allocation happens next. Who needs to participate in the evaluation, and how much time can they realistically commit? If the answer is "everyone will test it when they have time," the evaluation will fail. Effective trials require dedicated time blocks with specific testing assignments and feedback deadlines. Two hours per week for each participant is a floor: spreading the 5 to 10 hours each team member needs across a 14-day trial works out to roughly 2.5 to 5 hours per week.
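One way to make the allocation question concrete is to compare the hours each participant has actually blocked out against the hours the evaluation needs. The sketch below is a minimal illustration: the function `find_shortfalls`, the participant names, and the weekly commitments are all hypothetical, and the hour targets use the low end of the estimates given earlier.

```python
TRIAL_WEEKS = 2  # a 14-day trial


def find_shortfalls(weekly_blocks, hours_needed, trial_weeks=TRIAL_WEEKS):
    """Return {participant: missing hours} for anyone under-allocated.

    weekly_blocks: hours per week each person has actually blocked out.
    hours_needed:  total hours each person needs across the whole trial.
    """
    shortfalls = {}
    for person, needed in hours_needed.items():
        # Anyone without a calendar block contributes zero reliable hours.
        available = weekly_blocks.get(person, 0) * trial_weeks
        if available < needed:
            shortfalls[person] = needed - available
    return shortfalls


# Hypothetical team; hour targets use the low end of the article's estimates.
hours_needed = {"it_manager": 10, "rep_a": 5, "rep_b": 5}
weekly_blocks = {"it_manager": 5, "rep_a": 2}  # rep_b: "when I have time"

print(find_shortfalls(weekly_blocks, hours_needed))
# prints {'rep_a': 1, 'rep_b': 5}
```

An empty result is a reasonable precondition for starting the trial clock; any non-empty result identifies whose calendar needs to change first.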

Stakeholder alignment ensures that everyone who can veto the decision participates in the evaluation. The sales manager who will use the CRM daily has different priorities than the IT director who must maintain it or the CFO who must approve the budget. If any of these perspectives are missing during the trial, they'll surface as objections after purchase, when changing course is far more expensive.

The trial period itself becomes structured execution:

- Day 1: onboarding and initial setup.
- Days 2-4: individual feature testing against defined criteria.
- Days 5-7: team workflow validation.
- Days 8-10: integration and data migration testing.
- Days 11-13: stakeholder feedback consolidation.
- Day 14: decision meeting with documented findings.

This structure ensures comprehensive evaluation within the time constraint rather than hoping thorough testing happens organically.
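The day-by-day plan above can be encoded as data so that it maps onto real calendar dates once the trial start is known. This is a sketch, not a prescribed tool: `SCHEDULE`, `phase_for_day`, and `calendar_plan` are illustrative names, and the start date in the example is arbitrary.

```python
from datetime import date, timedelta

# (first day, last day, phase) taken from the 14-day plan in the text.
SCHEDULE = [
    (1, 1, "Onboarding and initial setup"),
    (2, 4, "Individual feature testing against defined criteria"),
    (5, 7, "Team workflow validation"),
    (8, 10, "Integration and data migration testing"),
    (11, 13, "Stakeholder feedback consolidation"),
    (14, 14, "Decision meeting with documented findings"),
]


def phase_for_day(day):
    """Return the phase scheduled for a given trial day (1 to 14)."""
    for start, end, phase in SCHEDULE:
        if start <= day <= end:
            return phase
    raise ValueError(f"Day {day} is outside the 14-day trial")


def calendar_plan(trial_start):
    """Translate trial days into calendar dates for a given start date."""
    return [
        (trial_start + timedelta(days=start - 1),
         trial_start + timedelta(days=end - 1),
         phase)
        for start, end, phase in SCHEDULE
    ]


# Arbitrary example start date.
for first, last, phase in calendar_plan(date(2025, 3, 3)):
    print(f"{first:%b %d} to {last:%b %d}: {phase}")
```

Publishing the resulting dates before the trial begins gives each participant a fixed deadline for their part of the testing.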

Organizations that follow this approach don't experience trial failures—they experience confident decisions. The CRM either meets the pre-defined criteria or it doesn't. If it doesn't, the trial ends with clear documentation of why, which informs the next evaluation. If it does, the purchase decision is backed by systematic evidence rather than gut feel.
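A minimal sketch of that pass-or-fail logic follows, assuming each criterion is tagged in advance as non-negotiable or nice-to-have. The `Criterion` class, the `decide` function, and the sample criteria are hypothetical and exist only to illustrate the idea.

```python
from dataclasses import dataclass


@dataclass
class Criterion:
    name: str
    must_have: bool  # non-negotiable vs. nice-to-have, set before the trial
    passed: bool     # recorded during the trial
    notes: str = ""  # documentation of what was observed


def decide(results):
    """Return (decision, blockers): reject if any must-have criterion failed."""
    blockers = [c for c in results if c.must_have and not c.passed]
    decision = "reject" if blockers else "purchase"
    return decision, blockers


# Hypothetical criteria and outcomes, for illustration only.
results = [
    Criterion("Lead-to-close workflow", must_have=True, passed=True),
    Criterion("Email integration", must_have=True, passed=False,
              notes="Sync dropped attachments during testing"),
    Criterion("Custom dashboards", must_have=False, passed=False),
]

decision, blockers = decide(results)
print(decision)
for c in blockers:
    print(f"- {c.name}: {c.notes}")
```

The list of blockers doubles as the documentation of why a trial ended, which is the input the next evaluation needs.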

## The Hidden Cost of Skipping Preparation

Companies that skip trial preparation don't save time; they defer it. The questions that should have been answered before the trial get asked during implementation, when changing course is far more expensive. The workflows that should have been validated during the trial turn out to be broken six months after go-live, when the team has already invested hundreds of hours in data entry.

The bandwidth that wasn't allocated for proper evaluation gets consumed anyway, just spread across a longer timeline and mixed with the frustration of using a poorly matched tool. The stakeholder alignment that didn't happen before purchase becomes a series of escalating complaints after deployment. The success criteria that weren't defined upfront become moving goalposts that the CRM can never satisfy.

This deferred evaluation cost compounds over time. A rushed trial leads to a poor CRM choice, which leads to low adoption, which leads to workarounds, which leads to data fragmentation, which leads to reporting failures, which leads to business decisions made on incomplete information. The bandwidth investment of up to $5,000 that was "saved" by skipping proper trial preparation can become a $50,000 operational drag that persists until the organization finally commits to re-evaluating and switching vendors.
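A rough way to weigh those two figures against each other is a break-even calculation. The expected-value framing here is an added assumption rather than something the article states: it treats preparation as worthwhile when the cost of preparing is less than the failure cost multiplied by how much preparation lowers the chance of a bad choice. The function names and the 25-point risk reduction are hypothetical.

```python
def break_even_risk_reduction(prep_cost, failure_cost):
    """Smallest drop in failure probability at which preparation pays for itself."""
    return prep_cost / failure_cost


def expected_net_saving(prep_cost, failure_cost, risk_reduction):
    """Expected saving from preparing, given how much it lowers failure probability."""
    return risk_reduction * failure_cost - prep_cost


PREP_COST = 5_000      # upper bandwidth estimate from the text
FAILURE_COST = 50_000  # operational drag figure from the text

print(f"{break_even_risk_reduction(PREP_COST, FAILURE_COST):.0%}")  # prints "10%"

# Hypothetical: preparation cuts the chance of a bad choice by 25 points.
print(expected_net_saving(PREP_COST, FAILURE_COST, 0.25))  # prints 7500.0
```

Under these assumptions, preparation only has to reduce the odds of a poor selection by ten percentage points to cover its own cost.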

The switching cost itself is rarely just the new CRM's price. It includes data migration labor, retraining time, productivity loss during transition, parallel system operation, vendor negotiation, and the opportunity cost of what the team could have accomplished if they weren't managing a CRM replacement project. Organizations that have been through this cycle once become far more willing to invest in proper trial preparation the second time around.

## Reframing the Trial as an Investment Decision

The shift from "free trial" to "bandwidth-bounded proof-of-concept" changes how organizations approach CRM evaluation. Instead of asking "Should we try this CRM?" the question becomes "Do we have the bandwidth to evaluate this CRM properly, and is the potential value worth that investment?"

Sometimes the answer is no. If the team is already underwater with existing commitments, starting a CRM trial sets the evaluation up for failure. The evaluation will be rushed, incomplete, and ultimately unreliable. It's better to delay the trial until bandwidth is available than to waste time on an evaluation that can't produce a confident decision.

When the answer is yes, the trial becomes a structured project with allocated resources, defined deliverables, and clear success criteria. The 14-day window isn't a countdown timer creating pressure—it's a focused sprint where everyone knows their role and what needs to be accomplished. The evaluation produces documentation that supports the purchase decision and informs implementation planning.

This reframing also changes how organizations interact with vendors. Instead of passively accepting a 14-day trial period, they negotiate evaluation terms that match their bandwidth reality. Some vendors offer extended trials for organizations that demonstrate serious evaluation intent. Others provide structured evaluation frameworks that reduce the planning burden. The key is recognizing that the trial period is a negotiable parameter, not a fixed constraint.

Organizations that treat CRM trials as bandwidth investments rather than free opportunities make better decisions. They evaluate fewer options more thoroughly rather than many options superficially. They enter trials prepared and exit with confidence. They avoid the six-month regret cycle that comes from rushed evaluation. And they recognize that the most expensive CRM isn't the one with the highest license fee—it's the one chosen without proper assessment.

Understanding [what CRM software actually does](https://flowccrm.com/articles/what-is-crm-software) provides the foundation for defining evaluation criteria, but the trial period is where theoretical understanding meets operational reality. The bandwidth invested in that evaluation determines whether the CRM becomes a productivity multiplier or an expensive distraction.